Conventional interfaces for computers and other electronics largely require users to operate devices with their fingers. Recent technological advances, however, have opened valuable opportunities for developing alternative interfaces that use voice, eye blinks, facial movements, or other body movements.

These alternative interfaces could transform the lives of people with disabilities that prevent them from freely moving their hands. This includes people who are partially or totally paralyzed, as well as patients affected by dystrophy, multiple sclerosis, cerebral palsy, and other neurological conditions that affect hand movements.

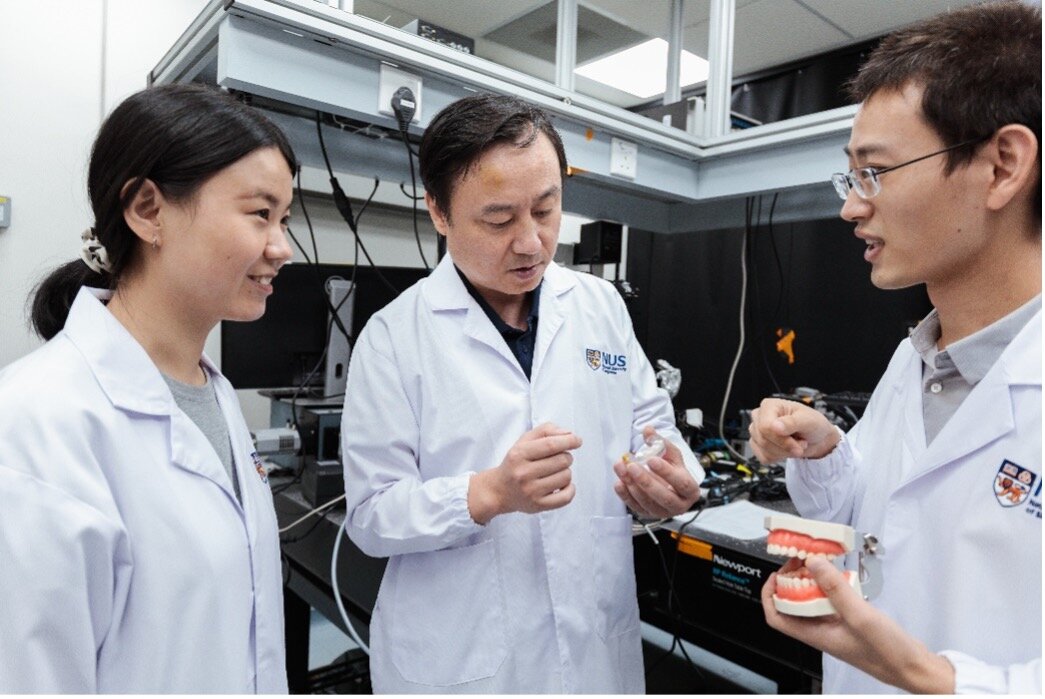

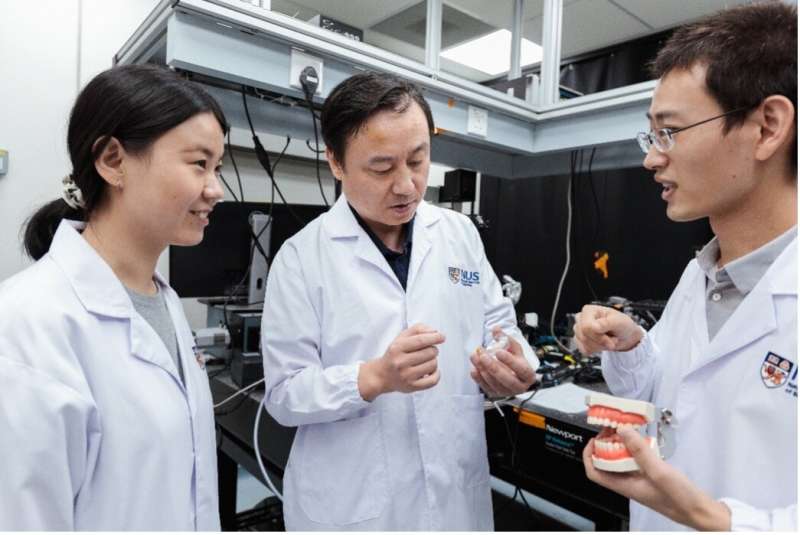

A team of researchers led by Xiaogang Liu at the National University of Singapore and Bin Zhou at Tsinghua University has recently developed an interactive mouthguard that allows users to control and operate devices by biting. This mouthguard, introduced in a paper published in Nature Electronics, is based on a series of mechanoluminescence-powered optical fiber sensors.

“Our work was inspired by dental diagnostics,” Bo Hou, the first author of this paper, told TechXplore. “We had thought of combining optical sensors and mechanoluminescent materials to detect the occlusal force of teeth. We then realized it might be possible to control human-computer interactions through unique occlusal patterns.”

To create their interactive mouthguard, the research team used a series of mechanoluminescence-powered distributed-optical-fiber (mp-DOF) sensors. These sensors consist of an array of elastomeric waveguides embedded with mechanoluminescent contact pads containing different colored pressure-responsive phosphors.

The researchers embedded two of these sensors into a mouthguard, a covering worn over the teeth to protect against injury during sports or to prevent teeth grinding. The phosphors contained in the sensors are sensitive to mechanical stimuli, which ultimately made it possible to create a bite-controlled interactive telecommunication system.

“Compression and deformation of the elastomeric mouthguard at specific segments during occlusion is mapped with the mp-DOF by measuring the intensity ratio of different color emissions in the fiber system, without the need for external light sources,” Liu explained. “The ratiometric intensity measurements of complex occlusal contacts can be processed using machine learning algorithms to convert them into specific data inputs for high-accuracy remote control and operation of various electronic devices, including computers, smartphones and wheelchairs.”

The researchers combined their mouthguard-based device with machine learning algorithms trained to translate complex bite patterns into specific commands or data inputs. In initial evaluations, their system could successfully translate bite patterns performed by users into data inputs with a remarkable accuracy of 98%.
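To make the idea of ratiometric sensing concrete, here is a minimal Python sketch, not the team's actual pipeline. It assumes three hypothetical color channels per reading, invented intensity values, and made-up command names ("left", "right", "select"). A simple nearest-centroid classifier stands in for the machine learning algorithms, which the article does not specify.

```python
import math


def intensity_ratios(channels):
    """Convert raw color-channel intensities into fractions that sum to 1.

    Using ratios rather than raw values makes the feature insensitive to the
    overall brightness of the emission.
    """
    total = sum(channels)
    if total == 0:
        return [0.0] * len(channels)
    return [c / total for c in channels]


def train_centroids(samples):
    """Average the ratio vectors of each label (hypothetical training step)."""
    sums, counts = {}, {}
    for channels, label in samples:
        ratios = intensity_ratios(channels)
        acc = sums.setdefault(label, [0.0] * len(ratios))
        for i, r in enumerate(ratios):
            acc[i] += r
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def classify(channels, centroids):
    """Return the label whose centroid is closest to the reading's ratios."""
    ratios = intensity_ratios(channels)
    return min(centroids, key=lambda label: math.dist(ratios, centroids[label]))


# Invented example readings: (channel intensities, command label).
TRAINING = [
    ((90, 10, 5), "left"),
    ((80, 15, 5), "left"),
    ((10, 85, 5), "right"),
    ((12, 90, 8), "right"),
    ((5, 10, 95), "select"),
    ((8, 6, 80), "select"),
]

centroids = train_centroids(TRAINING)

# A weaker bite with the same color balance still maps to the same command.
print(classify((45, 6, 3), centroids))
```

Because the classifier sees only the proportions between colors, a soft bite and a hard bite at the same spot produce the same feature vector. This is one plausible reason to prefer intensity ratios over absolute intensities.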

“Several assistive technologies, such as voice recognition and eye tracking, have been developed to provide alternative methods of control,” Liu said. “However, these may cause problems in use and maintenance. We report the first bite-controlled optoelectronic system that uses mechanoluminescence-powered sensors with distributed optical fibers integrated into a mouthguard.”

The interactive mouthguard-based interface created by the researchers could soon help improve the lives of people with limited dexterity, providing a viable alternative to keyboards or touch screens and thus allowing them to independently operate communication devices. Liu and his colleagues have already shown that their interactive mouthguard can be integrated with computers, smartphones and wheelchairs.

“In the future, we will try to explore opportunities to validate our device in a clinical setting, such as in care centers or nursing homes,” Liu added. “We are also developing the second generation of the smart mouthguard to improve the speed of control and the training time for the user.”